Sidekiq kind of revolutionized background job processing in ruby.

By providing very fast, threaded workers and inventing it’s own profitable Open-Source business model, it is really something to look at.

This article will not take a business oriented look at sidekiq though.

Any application will, at some point, require a sensitive refactoring that you want to have fully tested before shipping it.

Canary deployments will allow us to do that.

Canary what?

From the Martin Fowler blog

Canary release is a technique to reduce the risk of introducing a new software version in production by slowly rolling out the change to a small subset of users before rolling it out to the entire infrastructure and making it available to everybody.

In the context of sidekiq, a canary deployment will allow us to make changes to our workers, and have only a small percentage of jobs use the new version. If one job fails because of the change, sidekiq will retry it, highly probably in your main worker, preventing the error from happening again.

It will allow you to monitor a refactoring for any unexpected break, rollback easily (you just need to bring the percentage of jobs using the canary to 0) and promote the change to all workers once you are ready.

Sidekiq-canary

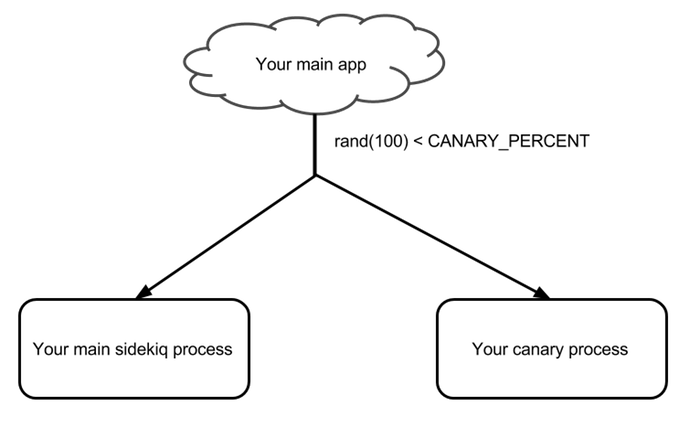

Sidekiq Canary will allow you to do canary deployments with sidekiq. We could represent it as the following:

Your main app will trigger sidekiq jobs, as it currently does.

Right now, your main sidekiq process executes those jobs.

Using Heroku, this sidekiq runs on the same app as your main one, except it’s a

different process type.

Your canary process will be a different app, so you can deploy it without having to deploy your main app. It will need to connect to the same redis instance, so it shares the same jobs queue.

However, you need to have this canary worker execute the default_canary queue instead of default.

You can achieve this with the following:

bundle exec sidekiq -q default_canary

Once this architecture is setup, you can set the DEFAULT_CANARY_PERCENT

environment variable on your main app to an integer between 0 and 100.

Once you have defined this environment variable, all jobs on the default queue will have X of 100 chances of running on your canary. All other jobs will run on your main process.

While your canary runs, you can monitor failures or any other related metrics to make sure no edge case have been broken by this change.

When you feel confident enough about the change, you can fully deploy it to your main app and have it used by all jobs.

Gotchas

Sidekiq canaries will pick jobs randomly. This means it is awesome for refactorings. But not so much for customer-facing or database changes as both the old version and the new one need to work.

Upgrading to the newest version of a library would be an excellent use case.

Changing the actions you perform on user registration (from sending an email to

sending a text for example) wouldn’t so much, as users would sometimes receive

one and sometimes the other.

Faim

Canary deployments are a an awesome way to improve your platform’s stability as they will allow you to experiment new changes while reducing the risk that they could entirely break your service.

You will probably want to get more from sidekiq-canary though, to monitor which

job happens in what queue, or allow some jobs to always run on the canary even

if the percentage is at 0.

If you look at the sidekiq-canary code, it is extremely simple on purpose. Fork

away and adapt to your needs!